Work with Multiple DICOM Stacks

Surface3D supports working with multiple DICOM stacks for a single object. For example, if you are tracking the bones of the knee, you might have a stack of CT images at the hip for determining the hip center, a stack at the ankle for determining the ankle center, and a larger stack at the knee for modeling the distal femur, proximal tibia, and patella. Each stack must be entered as its own scan data item in the session, and all images in each stack must have the same dimensions, spacing, and orientation.

Working with Multiple Stacks

Support for multiple stacks relies on the Reference Coordinate System (RCS) defined by the DICOM standard. The RCS is a coordinate system embedded in the person being scanned. Its origin can vary, but the X axis points from the patient's right to left, the Y axis from anterior to posterior, and the Z axis from inferior to superior. When you define landmarks and points of interest (POIs) in Surface3D, their coordinates in the RCS are saved to the subject file.

When you load an image stack and segment and crop it to model a particular bone, Surface3D calculates the transform from the RCS to the cropped image data. The reference frame of the cropped image data has its origin in the lower-left corner of the first slice, with X pointing to the right, Y up, and Z increasing through the image stack. This transform is called the patient matrix and is saved to the subject file when the image data is saved. Thus, for each trackable object you can have the following elements:

- a set of landmarks and POIs from different image stacks, each one with coordinates in the RCS

- a single masked, cropped image stack containing segmented image data for the object

- a surface model with vertices expressed in the reference frame of the segmented image data

- a patient matrix with the transform from the RCS to the segmented image data. This transform is used by all the DSX applications to convert the landmarks and POIs from the RCS into the local frame of the object.
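As a rough illustration of the last item, the sketch below applies a patient matrix to a landmark. It assumes the matrix is available as a 4x4 row-major homogeneous transform that maps RCS coordinates to the object's local frame, in the same length units as the landmark. How the DSX applications actually store and apply the matrix is not described here, and the function name, matrix, and landmark values are made up for the example.

```python
def rcs_to_local(patient_matrix, point_rcs):
    """Map a 3D point from the RCS into an object's local frame.

    Assumption: patient_matrix is a 4x4 row-major homogeneous transform
    (rotation in the upper-left 3x3, translation in the last column).
    """
    x, y, z = point_rcs
    return tuple(
        row[0] * x + row[1] * y + row[2] * z + row[3]
        for row in patient_matrix[:3]
    )


# Hypothetical patient matrix: 180-degree rotation about X plus a translation.
patient_matrix = [
    [1.0, 0.0, 0.0, 100.0],
    [0.0, -1.0, 0.0, 155.5],
    [0.0, 0.0, -1.0, 2.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical hip-center landmark digitized in the hip stack (RCS coordinates).
hip_center_rcs = (-80.0, 20.0, 450.0)
hip_center_local = rcs_to_local(patient_matrix, hip_center_rcs)
```

Because the landmark is stored in the RCS rather than in any one stack's frame, the same conversion applies whether it was digitized in the hip, knee, or ankle stack.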

Example: Modeling the Femur

If you use Surface3D for all your image processing, here is a sample procedure for modeling the femur that includes digitizing the hip center:

- Select the femur.

- Select the knee stack from the list of scan data items.

- Segment the femur. Use the segmentation tools to label, mask, and crop the voxels. Segmenting image data and creating surface models is covered in this tutorial.

- Save the segmented image file for the femur. Surface3D will also save the patient matrix to the subject file at this time.

- Create and save the surface model for the femur. The patient matrix is saved to the subject file during this step as well, in case you did not save the segmented image file (e.g., when doing bead-based tracking with Locate3D).

- Select File→Load Supplemental Image and select the hip stack.

- Use the **Landmarks** widget to define a landmark at the hip center.

- Save the subject file.

- If you want to go back to the knee stack, you can either use File→Load Supplemental Image again, or simply deselect and reselect the femur object in the Object Configuration widget. Once you’ve saved the segmented image data for the femur, it is loaded automatically whenever you select the femur.

Note: If you want, you can load the hip stack first and define the hip center landmark, then load the knee stack to segment the femur. When you’re done with the hip, use File→Load Supplemental Image or the deselect/reselect method to load the knee stack.

Third-Party Segmentation Software

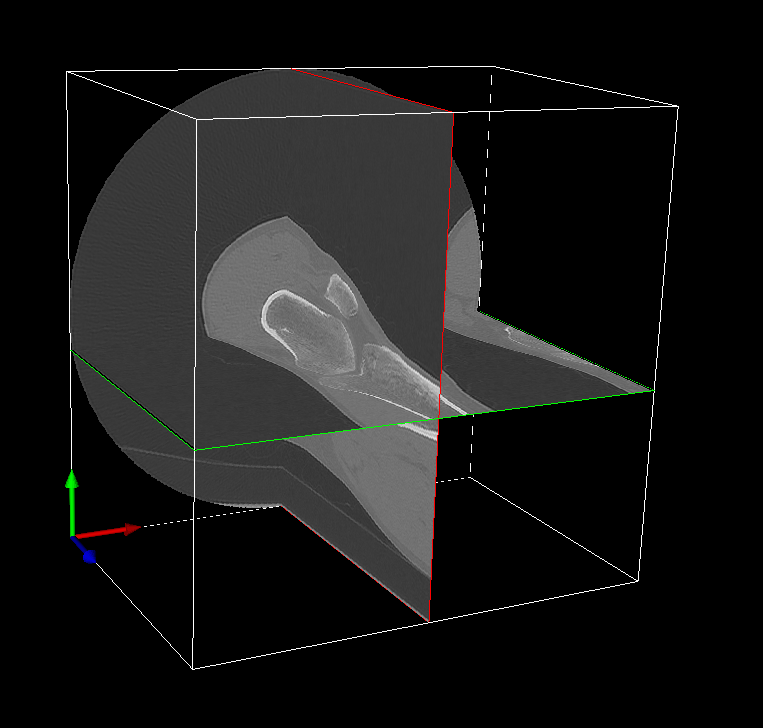

If you use third-party software (e.g., Mimics, ScanIP) to segment the image data and create surface models, you will need to manually specify the patient matrix in order to include landmarks that are defined in other image stacks. In the DICOM convention, within each image slice the origin is in the top-left corner, with the X axis pointing to the right, the Y axis pointing down, and the Z axis pointing out the back of the slice. In the DSX convention, the DICOM frame is rotated 180 degrees about the X axis, and moved down to the lower-left corner. Thus the X axis points to the right, the Y axis points up, and the Z axis points out the front of the slice. The origin of the entire stack is moved to the lower-left corner of the slice with the smallest Z value (within this local image frame, not within the RCS). Thus, the Z axis always points up through the stack, as shown here:

The patient matrix for each trackable object is the transform from the RCS to this DSX frame of the segmented image data, that is, the image stack used to generate digitally reconstructed radiographs (DRRs). You will need to calculate this transform yourself using the Image Orientation and Image Position fields in the DICOM header, together with the Y translation, the 180-degree X rotation, and possibly the Z translation described above. Whether the Z translation is needed depends on the Image Orientation field.
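The calculation described above might be sketched as follows. This is only an illustration under several assumptions, not the DSX implementation: it treats the patient matrix as a 4x4 row-major transform from the RCS to the local frame, measures the Y translation between voxel centers (Image Position refers to the center of the top-left voxel, so a half-voxel adjustment may be needed if the DSX origin is the outer corner), and applies to a stack that has not been cropped. The function and parameter names are hypothetical; `orientation` holds the six Image Orientation values, `slice_positions` the Image Position of every slice, and `row_spacing` the first value of Pixel Spacing.

```python
def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(u * v for u, v in zip(a, b))


def patient_matrix_from_dicom(orientation, slice_positions, n_rows, row_spacing):
    """Sketch of an RCS-to-local transform for an uncropped stack."""
    row_dir = list(orientation[:3])   # direction of increasing column index
    col_dir = list(orientation[3:])   # direction of increasing row index

    # 180-degree rotation about X: keep X, flip Y and Z.
    x_axis = row_dir
    y_axis = [-v for v in col_dir]
    z_axis = cross(x_axis, y_axis)

    # Z translation: start from the slice with the smallest local Z.
    first = min(slice_positions, key=lambda p: dot(p, z_axis))

    # Y translation: move from the top-left to the lower-left voxel.
    shift = (n_rows - 1) * row_spacing
    origin = [first[i] + shift * col_dir[i] for i in range(3)]

    # Rows are the local axes; the last column places the origin at zero.
    matrix = [list(axis) + [-dot(axis, origin)]
              for axis in (x_axis, y_axis, z_axis)]
    matrix.append([0.0, 0.0, 0.0, 1.0])
    return matrix


# Made-up axial stack: three slices, 512 rows, 0.5 mm row spacing.
example = patient_matrix_from_dicom(
    orientation=[1.0, 0.0, 0.0, 0.0, 1.0, 0.0],
    slice_positions=[[-100.0, -100.0, 0.0],
                     [-100.0, -100.0, 1.0],
                     [-100.0, -100.0, 2.0]],
    n_rows=512,
    row_spacing=0.5,
)
```

Choosing the starting slice by its local Z value, rather than by file order, is what makes the Z translation conditional: if the first slice in the stack already has the smallest local Z, no Z translation results. If the segmented image data has been cropped, the origin also has to be offset to the lower-left corner of the cropped volume.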

Alternatively, you can have Surface3D calculate the patient matrix for you, which may be easier than calculating it yourself:

- In the third-party segmentation program, output a mask file (.tif) of the entire knee stack in which the femur voxels are marked with a binary label.

- In Surface3D, load the knee stack, then load the mask file (File→Load Label).

- Mask and crop the knee stack, then save the cropped image data to the subject file. This cropped image data should be the same as what you would get from your third-party program.

- You can then use this image file for generating DRRs in X4D. The patient matrix will be saved to the subject file when you save the image file.

If you will also be using surface models created in a third-party program, make sure their vertices are expressed in the reference frame of the cropped image data. You can use the Image/Surface Match widget in Orient3D to check the frame of the surface model and modify it to match the frame of the image data.