Visual3D Tutorial: Looking at Large Public Datasets


Biomechanics is entering an era in which large datasets are the norm, and processing each file individually is tedious and time-consuming. Datasets captured over long periods are often inconsistent, which makes the work difficult to automate.

This tutorial walks through common issues encountered when working with large public biomechanics datasets, using a large published dataset of full-body gait from both stroke survivors and healthy participants.

Large Datasets

The dataset used for this tutorial is the raw motion data collected by Van Criekinge et al. ([reference]), which includes full-body motion capture gait data from 138 able-bodied adult participants and 50 stroke survivors. It includes full-body kinematics (PiG model), kinetics, and EMG data across a wide range of ages, heights and weights. The dataset is open source, which allows for free and open science, supports the growing biomedical research community, and makes it easier for scientists to reproduce results.

This dataset is unusual in its size and quality for an open-source dataset. Large datasets are essential for drawing accurate conclusions from scientific observations, and they are becoming the norm within the biomechanics community. Because they are large collections of data, they come with their own problems, which are explored here. Such datasets can be generated over a long period of time, during which people, standards or equipment may change. They can also be the culmination of several different projects, which leads to further inconsistencies. The most frequent issues we found were inconsistencies within the measured data. It is difficult to keep a rigid structure in any large dataset, but inconsistencies can wreck the ability to automate tasks, so we'll show how to process the data in datasets like this effectively.

Dataset

File Naming Conventions

A common inconsistency in large datasets is the naming of files. Filenames should convey information about a file in a manner that can be easily referenced. For example, calibration files should be named differently from motion files so that they can be separated, and motion files should include a trial number in their file name (if applicable).

In this dataset, we found inconsistent filenames as well as one outright naming error. Among the stroke subjects in particular, the motion files share no naming convention other than all including the letters “BWA”. The trial number is written in a variety of ways: separated from “BWA” by a space, by an underscore, or by nothing at all, and sometimes with a “0” prepended. Ultimately this forces the user to rely on wildcards to collect all the motion files at once, and limits the ability to select motion files individually.

Stroke subject 47 (TVC47) also has a mislabeled calibration file. It must be renamed from “BWA (0)” to a name that follows the dataset's calibration naming convention, such as “TVC-47 Cal 0”.
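As an illustration of why the naming variants matter, here is a minimal Python sketch (run outside Visual3D) that extracts the trial number from the styles described above. The example filenames, the regular expression, and the `BWA_NN.c3d` target form are assumptions made for illustration; they are not taken from the dataset itself.

```python
import re

# Assumed pattern: "BWA", an optional space or underscore, optional leading
# zeros, the trial number, then the .c3d extension.
TRIAL_PATTERN = re.compile(r"BWA[ _]?0*(\d+)\.c3d$", re.IGNORECASE)


def trial_number(filename):
    """Return the trial number encoded in a motion file name, or None."""
    match = TRIAL_PATTERN.search(filename)
    return int(match.group(1)) if match else None


def normalized_name(filename):
    """Return a hypothetical consistent name such as "BWA_02.c3d", or None."""
    number = trial_number(filename)
    return None if number is None else f"BWA_{number:02d}.c3d"
```

With this pattern, `trial_number("BWA 1.c3d")`, `trial_number("BWA_02.c3d")` and `trial_number("BWA3.c3d")` return 1, 2 and 3, while a name such as `"BWA (0).c3d"` returns `None` and can be flagged for manual review.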

Inconsistent Subject Prefixes (Stroke)

All markers in the static files provided with the data are prefixed with the subject ID, but the markers in the dynamic files are not, which prevents the built model from linking with the dynamic files. A pipeline can be written to prepend the subject ID to every marker in the dynamic files. The subject ID is stored in the PARAMETERS::SUBJECTS::NAMES signal within the dynamic files, but it uses a dash instead of an underscore (“BWA-2” vs “BWA_2”), so the names still won't match as stored. String expressions can be used to convert the subject ID strings to match the static files. The command below collects the dynamic files into a pipeline parameter.

    Set_Pipeline_Parameter_To_List_Of_Files
    /PARAMETER_NAME=DYNAMIC_FILES
    /FOLDER=C:\Users\shane\Documents\Tutorial Study\50_StrokePiG
    /SEARCH_SUBFOLDERS=TRUE
    /FILE_MASK=*BWA*.c3d
    /ALPHABETIZE=FALSE
    ! /RETURN_FOLDER_NAMES=FALSE
    ;
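The string conversion itself is simple. The following Python sketch shows the logic outside Visual3D; it is not the pipeline implementation. The function names and the `separator` argument are hypothetical, and the character that joins the prefix to the marker name should be checked against the static files.

```python
def static_style_id(subject_id):
    """Convert a dynamic-file subject ID such as "BWA-2" to the static-file form "BWA_2"."""
    return subject_id.replace("-", "_")


def prefix_markers(marker_names, subject_id, separator):
    """Prepend the converted subject ID to each marker name.

    `separator` is an assumption: use whatever joins prefix and label
    in the static files.
    """
    prefix = static_style_id(subject_id) + separator
    # Leave already-prefixed names untouched so the step can be re-run safely.
    return [name if name.startswith(prefix) else prefix + name
            for name in marker_names]
```

For example, `prefix_markers(["LASI", "RASI"], "BWA-2", "_")` returns `["BWA_2_LASI", "BWA_2_RASI"]`.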

Inconsistent Subject Prefixes (Healthy)

The static files provided for the healthy subjects do not have subject prefixes, but a seemingly random selection of the dynamic files do. In this case it makes more sense to strip the subject prefixes from the files that have them than to add prefixes to every other file.
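One way to strip the prefixes without knowing their exact format is to treat the marker labels in the static file as the reference. The Python sketch below illustrates that idea outside Visual3D; the function name and the example labels are hypothetical, and it assumes no unprefixed dynamic label merely ends with a different static label.

```python
def strip_subject_prefix(name, static_labels):
    """Return the static-file label that `name` ends with, or `name` unchanged."""
    if name in static_labels:
        return name  # already unprefixed
    # Prefer the longest matching label so "LASI" wins over a shorter "ASI".
    candidates = [label for label in static_labels if name.endswith(label)]
    return max(candidates, key=len) if candidates else name
```

Given static labels `{"LASI", "RASI"}`, the name `"SUBJ37_LASI"` becomes `"LASI"`, while `"RASI"` and any unrecognized name are returned unchanged.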

Building Models

The dataset uses the Plug-in Gait model set. Unfortunately, not enough markers were attached to the upper body to model it, so only a lower-body model can be made; see the tutorial here for a walkthrough of building a lower-body Plug-in Gait model. The subset of healthy subjects used in this tutorial has three pelvis markers, as opposed to the four used for the stroke subjects, so two separate models need to be made. The default values provided in the tutorial can be used to build the model, as the subject data will be entered in the processing pipeline.

Processing C3Ds into CMZs (Stroke)

Instead of manually processing each C3D file (assigning the model, running the computations, and saving the result as a CMZ), a pipeline can be developed to automate the process. Because the parameters needed to accurately calculate the models are stored within the dynamic files, those files must be opened first and the data retrieved so that it can be applied to the model.

Processing C3Ds into CMZs (Healthy)

Missing Parameters

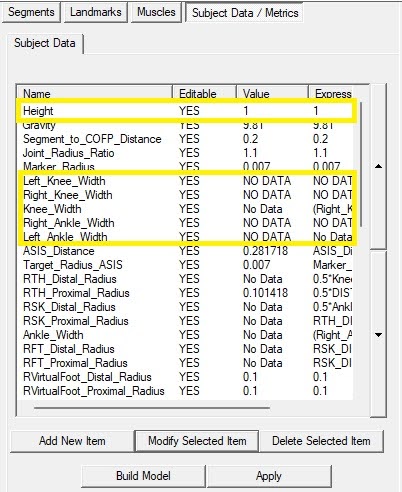

On inspection, some of the dynamic files were missing the parameters needed to calculate the model (height, mass, ankle widths, and knee widths), or had them incorrectly named. For the stroke subjects, this data was provided in a spreadsheet, so the values can be entered manually and the models recalculated. No such data exists for the healthy subjects, so the files missing the parameters (SUBJ37 and SUBJ42) must be excluded.
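A check like this can be automated before any models are built. The Python sketch below assumes the parameters of each file have already been read into a plain dictionary; how they are read is out of scope here. The parameter names in `REQUIRED` are hypothetical placeholders and must be replaced with the names actually used in the files, and treating non-positive values as missing is also an assumption.

```python
# Hypothetical parameter names; substitute the names used in the dataset.
REQUIRED = ("Height", "Bodymass", "LKneeWidth", "RKneeWidth",
            "LAnkleWidth", "RAnkleWidth")


def missing_parameters(parameters, required=REQUIRED):
    """Return the required names that are absent or not positive."""
    missing = []
    for name in required:
        value = parameters.get(name)
        if value is None or value <= 0:
            missing.append(name)
    return missing


def split_usable(files):
    """Split {filename: parameters} into usable names and {filename: missing names}."""
    usable, excluded = [], {}
    for filename, parameters in files.items():
        missing = missing_parameters(parameters)
        if missing:
            excluded[filename] = missing
        else:
            usable.append(filename)
    return usable, excluded
```

Files reported in `excluded` can then either be fixed from a spreadsheet, as with the stroke subjects, or dropped from the batch, as with the healthy subjects.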

Building Gait Events

Because the force plate data is inconsistent, gait events cannot be reliably determined from kinetics; kinematics must be used instead. A tutorial on the process can be found here.